How to Make Apps for Mobile and Wearable Devices HIPAA Compliant

Security is one of the most pressing challenges in our constantly connected world, and in healthcare it is vital. We're writing a series of articles on HIPAA, the US law that sets the requirements for any business that deals with protected health information (PHI) in any way. Now it's time to talk about smartphones and wearables.

Four years ago, Goldman Sachs analysts predicted that the digital revolution could potentially save the healthcare industry millions. In their view, the Internet of Things was key to cutting costs because it closes the gap between patients and doctors. Privacy, though, became a pitfall for IoT. Later in 2015, at the DerbyCon conference, security researchers presented 68,000 exposed medical systems they had found online. They gained access to MRI scanners, archiving systems, and communication devices simply by using Shodan, a search engine for connected devices.

It has only gotten worse since then. The March HIPAA report stated that the health data of one million people receiving care in America had been compromised, and poor security in various smart devices is one of the reasons.

So what HIPAA-compliant cyber hygiene can businesses apply if they want to prevent leaks and breaches and still get value out of modern technologies? The Security Rule is largely the same for every device that handles PHI, but a few specifics deserve closer attention.

Three specific rules for HIPAA-compliant wearables and smartphones

1. Lock screens and block screenshots

A number of HIPAA cases have followed doctors publishing a screenshot of an application or of a chat conversation with a patient. To prevent this, block screenshots inside the app. It is also better to show a splash screen instead of the app's contents when users switch between apps, because otherwise a glimpse of the app can be captured in between.

2. Push notifications should not contain any PHI

Neither the application nor the wearable's display should show anything PHI-related in alerts and push notifications. For instance, if users are waiting for their lab results, the notification should say only "Your lab results are ready." Users then have to unlock their device, authenticate in the application, and view the results there. Nothing about blood glucose levels, MRI scan findings, and so on should appear in the preview.
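The rule above can be sketched as a payload builder that sends only an opaque result ID, plus a guard that refuses to send a payload if PHI slipped in. This is a minimal illustration, not a specific push service's API: the `LabResult` type, the `PHI_FIELDS` set, and the payload layout are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical field names that would carry PHI in this app's data model.
PHI_FIELDS = {"glucose_mg_dl", "diagnosis", "mri_findings", "patient_name"}


@dataclass
class LabResult:
    result_id: str       # opaque, randomly generated ID (hypothetical)
    patient_name: str
    glucose_mg_dl: float


def build_notification(result: LabResult) -> dict:
    """Build a push payload that signals readiness without revealing PHI.

    Only the opaque result ID travels in the payload; the app fetches the
    actual values from the server after the user unlocks and signs in.
    """
    return {
        "title": "Your lab results are ready",
        "body": "Open the app and sign in to view them.",
        "data": {"result_id": result.result_id},
    }


def assert_no_phi(payload: dict, result: LabResult) -> None:
    """Raise ValueError if any PHI field name or PHI value appears in the payload."""
    text = repr(payload)
    leaked_keys = [key for key in PHI_FIELDS if key in text]
    leaked_values = [
        value
        for value in (result.patient_name, str(result.glucose_mg_dl))
        if value in text
    ]
    if leaked_keys or leaked_values:
        raise ValueError(f"PHI in notification: {leaked_keys + leaked_values}")
```

For example, `build_notification(LabResult("r-1029", "Jane Doe", 142.0))` yields a payload with the generic title and only `"r-1029"` in `data`, so `assert_no_phi` passes. Running the guard right before every send catches the case where someone later "improves" the message by adding the actual value.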

3. Do not store PHI on the device

We also follow a common safety practice: keep the database that handles the user base separate from the database that handles PHI, and encrypt both of them. We recommend not storing anything related to users' health information on the device itself: HIPAA-compliant cloud services (Microsoft Azure, AWS, etc.) exist for that. Store only temporary files on the device, and make sure its operating system (Android in particular) does not independently save them to its cache.
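The user/PHI separation above can be sketched with two independent stores linked only by a random token, so that the PHI store never holds emails or names. This is a minimal sketch under stated assumptions: in-memory SQLite stands in for the two server-side, HIPAA-compliant cloud databases, the table layouts and function names are hypothetical, and encryption of both stores is not shown.

```python
import secrets
import sqlite3

# Two separate stores. In production these would be separate, encrypted,
# server-side databases; in-memory SQLite only keeps the sketch self-contained.
users_db = sqlite3.connect(":memory:")
phi_db = sqlite3.connect(":memory:")

users_db.execute("CREATE TABLE users (email TEXT PRIMARY KEY, phi_token TEXT NOT NULL)")
phi_db.execute("CREATE TABLE records (phi_token TEXT, kind TEXT, value TEXT)")


def register_user(email: str) -> str:
    """Create a user and a random token that links them to their PHI rows."""
    token = secrets.token_hex(16)  # random, so the token reveals nothing about the user
    users_db.execute("INSERT INTO users VALUES (?, ?)", (email, token))
    return token


def _token_for(email: str) -> str:
    (token,) = users_db.execute(
        "SELECT phi_token FROM users WHERE email = ?", (email,)
    ).fetchone()
    return token


def add_record(email: str, kind: str, value: str) -> None:
    """Store a health record under the user's token, never under their email."""
    phi_db.execute(
        "INSERT INTO records VALUES (?, ?, ?)", (_token_for(email), kind, value)
    )


def records_for(email: str) -> list[tuple[str, str]]:
    """Look up the token in the user store, then fetch PHI by token."""
    return phi_db.execute(
        "SELECT kind, value FROM records WHERE phi_token = ?", (_token_for(email),)
    ).fetchall()
```

After `register_user("jane@example.com")` and `add_record("jane@example.com", "glucose_mg_dl", "142")`, `records_for("jane@example.com")` returns `[("glucose_mg_dl", "142")]`. The design choice is that a leak of the PHI store alone exposes readings tied to random tokens rather than to identifiable users.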

How to solve the encryption vs. performance challenge

Encryption is necessary if you want to create a secure, HIPAA-compliant environment for your product or service. But while web and desktop solutions can afford the strongest encryption you like, mobile devices have limits. The cost of encryption (and decryption) depends on the device's processing power and its random access memory (RAM), and with heavyweight encryption involved, performance suffers.

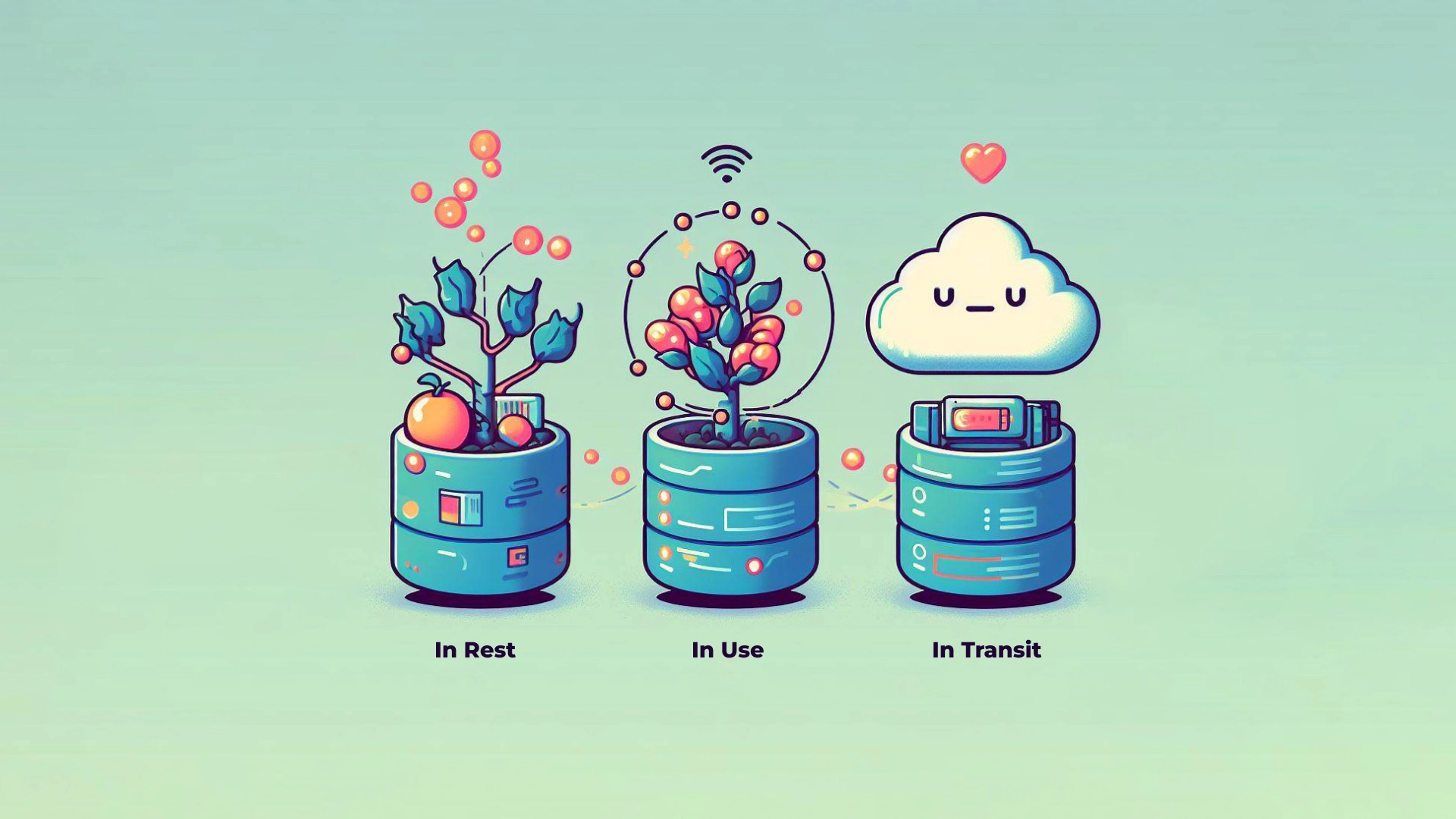

Imagine you want to build a chat for a large hospital network. Every message across this network needs to be encrypted while it is stored in the chat history ("at rest") and protected while it travels from one user to another ("in transit"). To protect a message in transit, it is encrypted when it leaves the sender and decrypted with a key, the secret value the decryption algorithm needs, when it arrives on the receiver's device. The more data you protect this way, that is, the more messages pass through your application, the more app performance you lose.

The answer is the classic "just add another server" approach to scalability, with the extra server hosted in a HIPAA-compliant cloud. Almost every popular cloud host now lets developers write a script on its platform that balances out the performance load. When your application is close to being overloaded with messages, the script triggers another server, and the encryption requests are distributed evenly between the servers.
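The trigger-and-distribute logic described above can be sketched as two small functions. This is a minimal sketch, not any cloud provider's autoscaling API: providers have their own autoscaling services, and the capacity and threshold values here are hypothetical placeholders that would come from load testing.

```python
import math
from itertools import cycle

# Hypothetical tuning values; real numbers would come from load testing.
MESSAGES_PER_SERVER = 500  # messages/second one server can encrypt comfortably
TRIGGER_UTILIZATION = 0.8  # add capacity before servers are fully saturated


def servers_needed(messages_per_second: int, current_servers: int) -> int:
    """Return how many servers to run; scales up (never down) past the trigger."""
    capacity = current_servers * MESSAGES_PER_SERVER
    if messages_per_second < TRIGGER_UTILIZATION * capacity:
        return current_servers
    # Size the pool so the load sits at or below the trigger utilization.
    target = math.ceil(messages_per_second / (MESSAGES_PER_SERVER * TRIGGER_UTILIZATION))
    return max(current_servers + 1, target)


def distribute(requests: list[str], servers: list[str]) -> dict[str, list[str]]:
    """Spread encryption requests evenly across servers in round-robin order."""
    assignment: dict[str, list[str]] = {server: [] for server in servers}
    for request, server in zip(requests, cycle(servers)):
        assignment[server].append(request)
    return assignment
```

With these placeholder values, one server handling 300 messages per second stays as is, while 450 messages per second (above the 400 threshold) triggers a second server. Triggering at 80% rather than 100% leaves headroom while the new server boots.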

Note, though, that using a HIPAA-compliant hosting solution doesn't make your product HIPAA compliant: you still need to follow all the rules, run audits, conduct risk assessments, and so on.

My mHealth application/wearable doesn’t need HIPAA compliance… or does it?

If your business develops mobile applications for healthcare or wellness, consider every way your application might accidentally interact with protected health information.

Even if your application doesn't collect any data itself and only provides a chat for doctors and patients, the information patients share in that chat, including past diagnoses and email addresses, is considered PHI. That means you need HIPAA compliance, with the same encryption, integrity, and authorization policies as any other device connected to medical data.

Also, if your connected device is, for instance, a blood glucose meter or an EEG signal analyzer, it may be classified as a medical device. Medical devices are regulated by the FDA, which brings a whole new set of regulations on board, including the need for FDA approval before the device can be distributed on the market. The FDA publishes its classification of medical devices.

One more point: HIPAA does not come into play when people use their wearable device or app purely for personal needs. But if there is any interaction between users, their personal data, and a hospital or pharmacy (covered entities) or other software developers working within healthcare (business associates), HIPAA applies. And, obviously, if you're developing an app for a covered entity, for instance to transfer real-time data from a wearable device to physicians for diabetes management, HIPAA applies as well. HIPAA is practically inevitable if you want to work closely with any healthcare organization in the US.

Workplace policies: about that BYOD

BYOD ("bring your own device") is an approach in which medical professionals (and people who work with them, software developers included) use their own smartphones to do their jobs. It is quite common because a separate device for every physician is expensive, and pagers are not good enough. But smartphones get lost and stolen, and they get hacked through public Wi-Fi networks, ads, and emails; without staff properly trained in breach prevention, smartphones are a liability. Make sure everyone whose smartphone or wearable can access PHI is trained in cybersecurity: no birthdays as passwords, two-factor authentication everywhere, no unlocked screens left unattended. Consider using remote wipe technology: it lets you erase or block all data in an app if the device it's installed on is lost.
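The remote wipe idea above can be sketched as a check the app runs at startup. This is a minimal sketch under stated assumptions: `fetch_wipe_flag` is a hypothetical stand-in for a call to a device-management backend (simulated here with a set of flagged device IDs), and deleting the app's data directory is a simplification of what platform or MDM wipe features actually do on a phone.

```python
import shutil
from pathlib import Path


def fetch_wipe_flag(device_id: str, wipe_list: set[str]) -> bool:
    """Hypothetical stand-in for asking a device-management backend.

    A real app would query its server over an authenticated HTTPS channel;
    here the server's answer is simulated by a set of flagged device IDs.
    """
    return device_id in wipe_list


def on_app_start(device_id: str, data_dir: Path, wipe_list: set[str]) -> bool:
    """Erase all locally cached app data if the device was reported lost.

    Returns True if a wipe happened; the caller should then also clear the
    session and send the user back to the login screen.
    """
    if not fetch_wipe_flag(device_id, wipe_list):
        return False
    if data_dir.exists():
        shutil.rmtree(data_dir)  # delete cached files and temporary data
    data_dir.mkdir(parents=True)  # leave an empty directory so the app restarts cleanly
    return True
```

Running the check on every launch (and ideally on resume) means a lost phone that comes back online loses its cached data before anyone can open the app's screens.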
