Conversational design for chatbots in healthcare

- What usually makes a good conversation?

- Agree that, from the machine’s point, humans are a mess

- Evaluate the complexity of communication your business and users need

- Develop chatbot’s persona and personality

- Tech kit for healthcare chatbot development

- Building a flow

- Assistants for healthcare professionals: a few notes

At the end of February, we wrote an article on how voice interfaces can help clinicians speed up and optimize administrative processes during patient visits. Right now, most of the world is swamped by the pandemic, and lots of tech companies are collaborating with health organizations to build AI solutions that help people check whether they are at risk of having COVID-19. Chatbots are among these AI-driven tools. Case in point: the mental health chatbot market has spiked as people try to deal with their anxiety, panic, the necessity to self-isolate, and the fact that the world will never be the same.

In this article, we want to discuss the language of chatbots. The gap between the conversational flow we are used to and the stumbling, rocky dialogue with a machine is enormous. This gap is often blamed on technical difficulties, but it’s actually a script issue. Let’s talk about what helps bots hold a good conversation and how you can build it.

What usually makes a good conversation?

When you call your doctor and describe your symptoms, the goals for both of you are:

a) list all signs of illness you have,

b) figure out what the most probable diagnosis is,

c) figure out what to do next.

These are the basics, right? We want to know what we have and how we can get better, and the doctor wants to help us.

Remember how we describe symptoms.

If it’s our family doctor, we tend to be emotional and dramatic, we refer to our previous consultations and make jokes.

We can afford to be vague when describing our symptoms, and vague often means human: we might not have researched the difference between mild and severe coughing fits, but we describe how the coughing goes - and the doctor draws their own conclusions.

If we have an idea of what illness it might be, we can share our guess with the doctor, and if we spell the medical term wrong, no one will care. The doctor will ask for details. Additionally, the doctor can recall that you live in an industrial zone, or that they’ve seen on Instagram that you travelled recently. They can pick up on the tone of your voice and ask additional questions. They can ask how you’re doing in general to gather more context for a comprehensive diagnosis. And so on.

Then, they will tell you what’s going on and what to do. But how will they do it? They can schedule an appointment for a time before or after work. They can list medications with the same active compounds but different prices, and you’ll choose an option that won’t hurt your wallet. They will ask you to tell them if symptoms get worse and to keep them updated.

This description is what our copywriter went through recently, and, of course, it may seem too perfect; it’s not a universal example. The main point is that even if your physicians don’t engage with you as much as theirs did, and even if you don’t tell them about the latest sad pandemic memes, you still have a dialogue that is

- Cooperative,

- Goal-oriented,

- Context-aware,

- Error-tolerant,

- Turn-based, and

- Polite.

In our previous article, we mentioned that human language is inherently cooperative and can easily operate in imaginary fields. Erika Hall and her Mule team selected the characteristics above to conclusively describe what conversations in human language are (usually) like.

And these principles are what good chatbots have to follow. How can we help them do so?

Agree that, from the machine’s point, humans are a mess

A lot of healthcare businesses more or less agree that chatbots and voice assistants can increase engagement, health awareness, trust between care organizations and patients, and so on. But while they are somewhat goal-oriented, chatbots visibly lack other features of human connection. We have to call Alexa multiple times to create a simple shopping list. People who installed Infermedica - a voice-based chatbot with a symptom checker that works similarly to lots of chatbots - said the technology is very helpful in tracking changes in their health, but “tedious, because it requires each part (symptom, body part, intensity, date and time) to be recorded separately and goes through instructions every single time.” There’s no conversation, no continuity of dialogue. At the same time, people praised the fact that Infermedica synchronises with data that is already in the voice assistant system, so they don’t need to state their age or gender: the system already knows. Context wins hearts.

We understand struggles like that perfectly well when we use chatbots in our daily lives, but we often fail to build solutions to them into our own technology. And the way to handle such variety in how people talk, express themselves, and want to receive assistance is to acknowledge its inherent messiness.

There’s no simple way to recreate a seemingly breezy real-life scheduling interaction in a chatbot. There’s no simple way to create an experience that resembles human experience. It’s complicated, and it requires lots of iterations, research, and a willingness to accept one’s mistakes and do better. You can't build a good chatbot by guessing and making assumptions about how people talk, because you don't actually know how they talk, and the goal here is to make your chatbots and your voice assistants fluent in humans.

Evaluate the complexity of communication your business and users need

The business value of technology lies in the way it helps a business improve its customers' lives - and improved lives convert into profits. Healthcare customers have different needs - and those needs must define your chatbot’s main traits and features.

For instance, say you discovered a need to remind patients about their meds because of poor adherence and re-hospitalisation rates. That calls for a chatbot that will notify them about their meds, right?

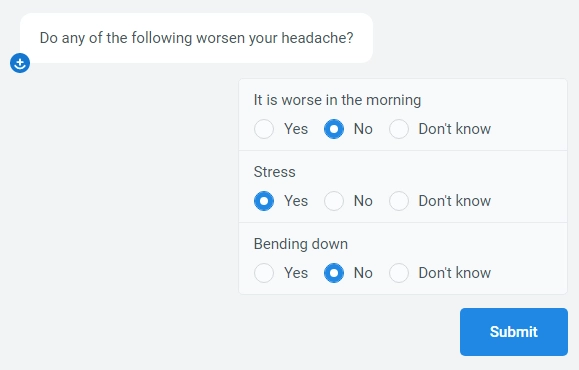

A simple button-based bot nails these needs: from the developers’ standpoint, it’s more affordable and easier to build. Writing scripts for such bots is quicker as well, but it’s still not easy: you have to create questions and answers that resemble the way a human talks. Note that if you’re building a voice assistant, it’s not a good idea to include automatic-response-based “buttony” interactions. Remember how irritating it is to talk to a bank’s phone bot.
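To make the idea of a rule-based, button-driven bot more concrete, here is a minimal sketch of a medication reminder flow. It is not taken from any real product: the node ids, the wording, the button labels, and the `step` helper are all our own illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One bot message plus the buttons offered under it."""
    text: str
    buttons: dict = field(default_factory=dict)  # button label -> id of the next node


# Hypothetical reminder flow; every id and phrase here is made up for illustration.
FLOW = {
    "reminder": Node(
        "Time for your evening dose. Have you taken it?",
        {"Yes, taken": "done", "Not yet": "snooze", "I feel unwell": "escalate"},
    ),
    "snooze": Node("No problem. I'll check in again in 30 minutes."),
    "done": Node("Noted. See you tomorrow."),
    "escalate": Node("Let's get you to a nurse. Connecting now."),
}


def step(node_id: str, pressed: str) -> str:
    """Return the id of the next node; stay on the same node if the label is unknown."""
    return FLOW[node_id].buttons.get(pressed, node_id)


if __name__ == "__main__":
    current = step("reminder", "Not yet")
    print(FLOW[current].text)
```

The whole "intelligence" of such a bot lives in the script: the code only follows the arrows, so the quality of the conversation depends on how human the messages and button labels sound.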

If you want to build a bot a bit closer to humans, you need an open-text (or NLP-based) chatbot.

Develop chatbot’s persona and personality

Lots of disciplines related to product development use the term “persona.” Chatbots’ persona is a set of features that corresponds with users’ needs and interests, chatbots’ functionality, and a brand’s voice. In other words, the chatbot is an addition to your brand, a tool that makes some things faster and automates processes, and it needs to speak the same language as your website and your social media.

Unless your chatbot has a distinct, contrasting personality, of course. E.g., if you have a super-serious website with a sophisticated knowledge base on different drugs, it might be an unusual but valid decision to try out a lively bot that speaks in clear, simple terms. (Needs testing.) Personality is how your chatbot behaves. Does it speak with an erratic energy? Is it calm and soothing? You decide - on the basis of research, of course.

When choosing a personality for your chatbot, make sure it corresponds with its functionality.

A few words on the “trap of delightfulness”

Lots of designers - conversational designers in particular - fall into the “trap of delightfulness” that Hall describes: creating amazing, bright things when there’s no need for them. In attempts to imitate a dialogue between two people, we find ourselves creating bots that send supportive emoji to people who’ve just checked their symptoms and got bad news, or that use exclamations (like “wow” and “yikes”) that may seem like A Human Thing To Do but are ill-advised when it comes to the end user. All the more reason to carefully consider every iteration.

Tech kit for healthcare chatbot development

The tech skills you need to build a chatbot depend on how much natural language processing (NLP) you want to implement. Button-based chatbots, as we’ve mentioned, do not need sophisticated algorithms as a basis; rule-based instructions are sufficient. Replika and other more complex bots - those oriented, for example, toward mental health management - run on NLP. Let’s take a quick dive into that.

Chatbots use, process, and analyze three types of data.

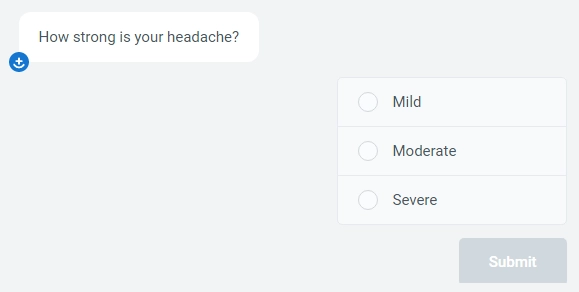

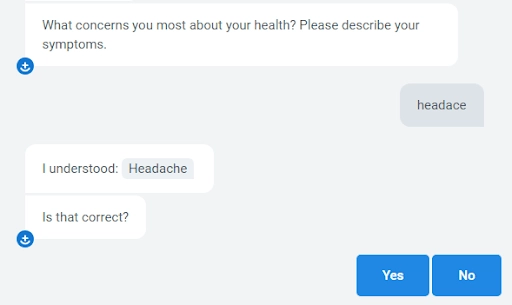

Utterances are incoming data: phrases, questions, emojis, and any other user input you can think of. They are also called variations. As you understand, in button-based chatbots users select from a pre-written list, and every answer corresponds with several dedicated responses. Take a look at this example:

In an NLP-based chatbot, instead of options we’ll have the user’s free-form input, for instance, “It hurts to breathe,” “wild,” “really strong,” etc. This variety is what makes building a good, complex chatbot so challenging.

Intent (or intention) is what the user meant: the semantic direction of their answer, its theme. All of the inputs above may refer to a severe or moderate headache, but each may get a different answer from the bot, depending on the analysis of the user’s intent. You can teach your bot to be compassionate, kind, and understanding using different words. When building an NLP-based chatbot, you need to find out - through user research, testing, and feedback on the bot’s messages - what answers make people who say their headache is wild feel safe, encouraged, or calm (whatever your chatbot’s personality and goal is).

There are existing third-party tools - machine learning frameworks - that help you train bots to classify intent and pick up other subtleties of language. Google’s Dialogflow, IBM’s Watson, Amazon’s Lex, and others are among them. They have ready-to-use code your developers can utilise, which cuts development time. You can also build these ML tools in-house - languages used for ML solutions include Python, Java, PHP, etc.

Entities are data points that algorithms pick out of users’ messages and refer to. In our headache case, the phrase “It hurts to breathe” can get a chatbot to cross-reference “headache” and “breathing issues” in scientific articles. Then, it can get semantic matches from a database of actual conversations. And finally, it will generate a response.
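The relationship between utterances, intents, and entities can be sketched in a few lines. This is a deliberately naive stand-in that counts shared words; frameworks like Dialogflow or Lex use trained models instead. The intent names, example phrases, entity vocabulary, and function names below are hypothetical and exist only for illustration.

```python
import re

# Hypothetical example utterances per intent; a real bot would learn from far more data.
INTENT_EXAMPLES = {
    "report_symptom": ["my head hurts", "it hurts to breathe", "i have a headache"],
    "book_appointment": ["i need to see a doctor", "schedule an appointment"],
}

# Hypothetical entity vocabulary: surface word -> (entity type, canonical value).
ENTITY_WORDS = {
    "head": ("body_part", "head"),
    "headache": ("symptom", "headache"),
    "breathe": ("symptom", "breathing difficulty"),
    "wild": ("intensity", "severe"),
    "strong": ("intensity", "severe"),
}


def tokens(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def classify_intent(utterance: str) -> str:
    """Pick the intent whose examples share the most words with the utterance."""
    words = tokens(utterance)
    scores = {
        intent: max(len(words & tokens(example)) for example in examples)
        for intent, examples in INTENT_EXAMPLES.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "fallback"


def extract_entities(utterance: str) -> dict:
    """Collect known entity words found in the utterance, keyed by entity type."""
    found = {}
    for word in tokens(utterance):
        if word in ENTITY_WORDS:
            kind, value = ENTITY_WORDS[word]
            found[kind] = value
    return found


if __name__ == "__main__":
    print(classify_intent("It hurts to breathe"), extract_entities("It hurts to breathe"))
```

Word overlap breaks down quickly on real input (synonyms, negation, slang), which is exactly why the variety of utterances pushes teams toward trained classifiers.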

The complexity of NLP-based bots also varies. There are

- Chatbots that get semantics (what the query means in different contexts), text structures, and can recognize phrases.

- Chatbots that recognize morphemes - words.

- Chatbots that recognize and understand slang and learn continuously, adjusting their language and behaviour, enhanced through sentiment analysis.

For the first two types of bots, you need to prepare a large amount of unstructured “talking”, feed it to the algorithms, and switch between unsupervised and supervised machine learning until the error rate drops to an acceptable level. For the last, you need sentiment analysis and deep learning.

Datasets for training differ depending on the main function of your chatbot. If you want it to be a doctor’s virtual assistant, feed the algorithms a corpus of medical terminology and scientific articles, and interview as many doctors as possible about their jargon, slang, and common verbal reactions. If you want to build something for end users, consider taking samples from Twitter or from context-free datasets of other chatbots. Remember that both doctors and healthcare end customers are people, so start listening more closely to how you and the people around you talk. It will help in our next stage.

Building a flow

To build a cool, non-irritating bot, you need to transfer all the qualities of a good conversation we described in the beginning to your scripts/scenarios - and train the bot to use them in conversation with users.

Let’s see how to do it.

Teach your bot to act differently depending on user’s emotional responses

Dialogue between humans is cooperative: in general, participants work towards understanding and more or less positive emotions. So it’s a good idea for a bot to train continuously, analysing users’ responses to its messages and ranking them. It seems like such an obvious idea - to stop using responses that caused negative reactions in users - but bots often lack “memory” and, therefore, context awareness.
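One simple way to give a bot this kind of "memory" is to keep a score for every reply variant and prefer the ones users reacted to well. The sketch below is only an assumption about how that could look: the `ResponseRanker` class and its +1/-1 scoring are our own illustration, and how you decide that a reaction was positive or negative is left out entirely.

```python
from collections import defaultdict


class ResponseRanker:
    """Tracks how users reacted to each reply variant and prefers the better ones."""

    def __init__(self, variants):
        self.variants = list(variants)
        self.reactions = defaultdict(list)  # variant -> list of +1 / -1 reactions

    def record(self, variant: str, positive: bool) -> None:
        """Store one user reaction to a reply the bot has sent."""
        self.reactions[variant].append(1 if positive else -1)

    def average(self, variant: str) -> float:
        """Mean reaction score; variants nobody has seen yet count as neutral (0.0)."""
        scores = self.reactions[variant]
        return sum(scores) / len(scores) if scores else 0.0

    def best(self) -> str:
        """Return the variant with the highest mean score (first one wins on ties)."""
        return max(self.variants, key=self.average)


if __name__ == "__main__":
    ranker = ResponseRanker(["That sounds rough.", "Yikes!"])
    ranker.record("Yikes!", positive=False)
    print(ranker.best())
```

Because untested variants score as neutral, a reply that annoyed users drops below replies that have not been tried yet, which is the minimum behaviour the paragraph above asks for.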

To make this happen, you can build a feedback-gathering system into your interface, as the heavy-duty option. Or you can rigorously train the bot to recognize and understand negative responses - and to react to them appropriately. So, add swearing, refusals, and heated, angry exclamation points to your scripts. You can’t avoid them - so it’s best to teach the bot how to react to them.

It also works the other way around: teach the bot how to react to positive responses as well, and the reaction must not be lame. “It’s great,” “thanks for the help,” and “this is very good” are good indications of a nice talk, but they often leave users torn between wanting to continue talking and wanting to stop. We are sure it has happened to some of you in real life: some praise (both given and received) is extremely awkward. Dedicate time to reducing “awkward pauses,” and teach the bot to engage and re-engage with a user in different ways.

This matters especially for self-help chatbots, as people who use them often suffer from anxiety and low self-esteem, so awkwardness in an interaction with a BOT won’t make their day any better.

Avoid echoing

So, imagine this dialogue:

- User: Hi, I need to visit a doctor.

- B: When do you want to visit a doctor?

Or:

- User: Hi, I want to order a pizza.

- B: Hi, what pizza do you want?

Echoing users’ responses - or echoing context - is a very lame habit for a bot. In real life, no one repeats your exact wording back to you unless they didn’t understand you, couldn’t hear you, etc. Echoing is sometimes considered a demonstration that the bot understands context, but, unfortunately, that is not the case.

Enhance the context

If your bot doesn't echo the context, it makes it more… contextual. And it’s natural. Let’s take a look.

- User: Hi, I want to order pizza.

- B: Hi! Make your choice.

Or:

- User: Hi, I want to schedule an appointment with my doctor.

- B: Hello. What date do you have in mind?

Or, even better:

- User: Hi, I want to schedule an appointment with my doctor.

- B: Hello. What date do you have in mind?

- U: This Wednesday?

- B: Here are the free time slots.

- U: <14:00>

- B: Great, I’ll tell her to expect you.

- B: Has anything happened?

And, well, you get the point. While we’re perfectly aware that bots are bots - and that’s usually why it’s great to talk to them: they are not humans, they can’t judge, etc. - we still want our conversations to be as human as possible. People around us remember the context of our conversations. (Ideally.) Good healthcare chatbots should encode at least some part of the recent dialogue history and the user’s social data.
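Here is one way to picture "encoding recent dialogue history": the bot keeps the last few turns plus the facts it has already learned, and only asks for what is still missing. This is a rough sketch under our own assumptions; the `Conversation` class, the `date` and `time` slots, and the prompts are hypothetical and simply mirror the appointment dialogue above.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional

REQUIRED_SLOTS = ("date", "time")  # hypothetical facts needed to book a visit

PROMPTS = {
    "date": "What date do you have in mind?",
    "time": "Here are the free time slots.",
}


@dataclass
class Conversation:
    user_name: Optional[str] = None  # e.g. taken from the messenger or patient portal
    slots: dict = field(default_factory=dict)  # facts learned so far
    history: deque = field(default_factory=lambda: deque(maxlen=10))  # recent turns only

    def remember(self, speaker: str, text: str, **slots: str) -> None:
        """Store the turn and any facts that were extracted from it."""
        self.history.append((speaker, text))
        self.slots.update(slots)

    def next_prompt(self) -> str:
        """Ask only for what is still missing instead of repeating known facts."""
        for slot in REQUIRED_SLOTS:
            if slot not in self.slots:
                return PROMPTS[slot]
        date, time = self.slots["date"], self.slots["time"]
        return f"Great, I'll tell her to expect you on {date} at {time}."


if __name__ == "__main__":
    chat = Conversation(user_name="Naomi")
    chat.remember("user", "This Wednesday?", date="Wednesday")
    print(chat.next_prompt())
```

The important design choice is that the prompt is derived from what the bot already knows, so a user who has given a date is never asked for it again.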

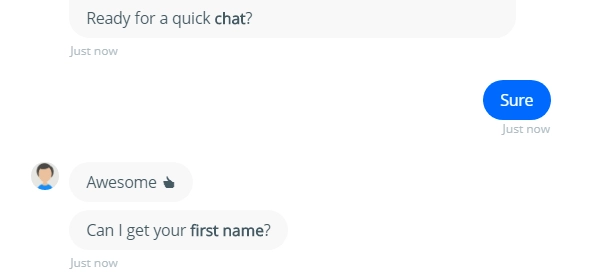

Here’s an example from the collect.chat gallery where the bot we’re making an appointment with does not gather the context:

The wonderful thing about chats that are integrated with messaging platforms like Facebook Messenger or Telegram is that they have access to users’ social data - thus, they can start with context-aware greetings. The same works for chatbots that are integrated with clinics’ websites and different patient portals. They know what the user’s name is.

You can play with this context - “May I call you Naomi?” - but it’s a weak move to ignore it.

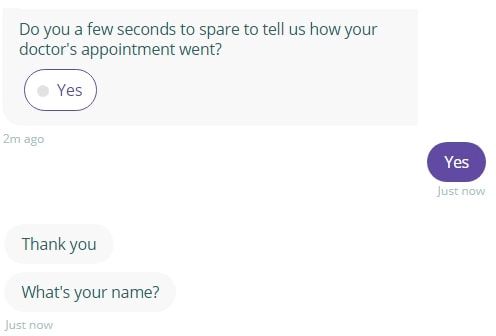

Another example of not getting a context: first, the bot asks for our feedback on the doctor’s appointment, and then it… asks our name.

From the type of questions, we assume the conversation will build on our previous interactions with the organization and/or its systems, but our expectations aren’t met. If you want to build a chatbot that is an extension of a CRM/EHR/patient portal, make sure you’re utilizing data that a) went through the system, b) went through the chatbot.

Chatbots that understand the context of the ecosystem users interact with are powerful and useful because they act as helpers - in this case, for both doctors and patients. Chatbots that don’t do the invisible work of contextualizing usually irritate.

Construct a polite and concise dialogue

The example above hits another “no” of conversational UI: its interface implies a choice, but does not offer it. That creates an illusion of choice and is very freaking rude.

A useful thing to research if you want to construct dialogue that doesn’t come across as rude is UX microcopy guidelines. Among these are, for instance, the following rules:

- Do not use “please”, if “please” doesn’t imply a choice.

- Use active voice instead of passive voice, when possible, because it makes conversation personal and dynamic. (Not “appointment is scheduled,” but “I scheduled a visit at…”).

- Avoid the word “remember”, unless you want your conversation to be reminiscent. (Not “Remember this password,” but “use this password,” not “remember to take your meds,” but “Don’t forget about #medication name#”)

And so on. Apart from that, it’s considered a gold standard to fit the bot’s responses into one to three lines of dialogue, but it depends on functionality. If you want your bot to be able to tell a story, shoot. Just don’t forget to re-engage users from time to time, making sure they are still there.

Be cautious, though, of the trap of delightfulness. A good, calming story from a chatbot fits only as far as it’s justified by the chatbot’s functionality. No need to make, for instance, a symptom checker fancy.

Choose chatbots’ tone carefully

Here’s another thought: how often do you ask people for a “few seconds to spare” - in that exact wording? This is formal language: while it looks okay in written form, we inevitably associate it with official, serious settings. Your chatbot should understand a formal tone perfectly - because people are more formal when they’re texting than in spoken dialogue - but whether it should talk like that itself is a matter of its personality.

In general, it’s better to use simple words even if users (unless they are doctors) use sophisticated ones.

Also, it’s good practice to avoid a passive-aggressive tone (“I see you didn’t fill your charts”) and anxiety-inducing wording (“Sounds bad, isn’t it?”) in chatbots of any personality.

Make it error-tolerant

Today, we take for granted how easy it is to google something, but the search engine has one of the best conversational designs in the world. Google is remarkable at understanding users’ intent: it remembers our previous search queries and is extremely tolerant of our typos and errors. Use Google as the bar to reach for. Here’s how some chatbots are handling it:

In a perfect world, the system wouldn’t wait for confirmation and would go straight to further symptom assessment, but it’s good anyway.
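Typo tolerance does not have to start with heavy machinery. As a small illustration, Python's standard `difflib.get_close_matches` can map a misspelled word to the closest entry in a known vocabulary. The symptom list, the `normalize_symptom` helper, and the 0.7 cutoff are our own assumptions; a production symptom checker would need a far richer approach.

```python
from difflib import get_close_matches
from typing import Optional

# Illustrative vocabulary; a real bot would use a proper medical term list.
KNOWN_SYMPTOMS = ["headache", "cough", "fever", "nausea", "sore throat"]


def normalize_symptom(user_text: str, cutoff: float = 0.7) -> Optional[str]:
    """Map a possibly misspelled word to a known symptom, or None if nothing is close."""
    cleaned = user_text.strip().lower()
    matches = get_close_matches(cleaned, KNOWN_SYMPTOMS, n=1, cutoff=cutoff)
    return matches[0] if matches else None


if __name__ == "__main__":
    print(normalize_symptom("headake"))
```

When the match is confident, the bot can simply carry on with the corrected term; when it returns `None`, that is the moment to ask a clarifying question rather than guess.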

Don’t put a burden of not understanding the question on the user

“I didn’t understand that.” How many voice assistants say this phrase? Like… All of them?

It’s easier in text because you have the opportunity to state explicitly that the bot doesn’t get what the user is saying - and to train the bot to try helping the user in another way, using data from the context. In general, the phrase “I didn’t understand that” is a conversation killer if it’s not followed by a phrase that helps the user feel relieved rather than completely misunderstood and abandoned.
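A fallback can take the blame itself and offer a way forward. The sketch below shows one possible escalation: suggest options first, then ask for a rephrase, then hand over to a person. The `fallback_reply` function, its wording, and the three-step order are hypothetical choices of ours, not a recommendation from any specific framework.

```python
def fallback_reply(failed_attempts: int, suggestions: list) -> str:
    """Escalate help with each miss instead of repeating one dead-end phrase."""
    if failed_attempts <= 1:
        if suggestions:
            # Use context (e.g. recent topics) to offer concrete options.
            options = ", ".join(suggestions)
            return f"I may have missed that. Did you mean one of these: {options}?"
        return "I may have missed that. Could you put it another way?"
    if failed_attempts == 2:
        return "I'm still not getting it, and that's on me. Could you describe it in other words?"
    # After repeated misses, stop looping and offer a human.
    return "Let me connect you with a person who can help."


if __name__ == "__main__":
    print(fallback_reply(1, ["book a visit", "check symptoms"]))
```

Whatever wording you choose, the point is that every "I didn't get that" comes with a next step attached.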

Be consistent and honest

At a certain point, Symptomate’s bot, which we’ve used to illustrate some of our points, suddenly changed the pace of the conversation.

At first, the bot asked us one question at a time, and then it suddenly switched to a completely different format and tone of questions. Tread carefully with changes in conversation flow, and, ideally, warn users about them: it’s also a question of politeness. Remember the rule of turn-based flow? Don’t disrupt it so suddenly.

One last recommendation: always build preventive measures against self-medication into your chatbot, whatever its complexity.

Assistants for healthcare professionals: a few notes

All the recommendations above work for doctor- and nurse-oriented chatbots, too (especially the points on politeness and tone!). But there are a few other things to consider when building for healthcare professionals.

You must have proof of reliability. If it’s an AI chatbot that helps people make decisions and search for answers, you have to provide them with evidence that it answers correctly.

Clearly divide “fun” and “interesting.” Interacting with an assistant, healthcare professionals don’t want to have fun. But they do want the experience to be interesting, understandable; for it to offer an angle they didn’t consider before.

It’s very hard to build truly “intelligent” assistants for doctors because the chatbot has to skip the “obvious” answers - they are really irritating for people who have worked in the industry for years - and go right to the answer doctors don’t know. And that answer should be short, clear, and clinically validated.

For that to happen, you have to collaborate very closely with doctors (or other healthcare professionals) and build the bot with them.

Our experience with chatbot development and final thoughts

Should you build a chatbot for your healthcare business? If you have an answer to the question “What issue does this chatbot solve faster than anything else?” - you certainly should.

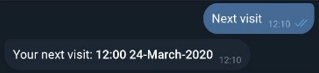

Case in point: one of our clients is a clinical trial business. For such businesses, it’s very common to spend enormous amounts of money on drug discovery and participant recruitment - and later fail to retain participants because they forgot about a scheduled visit, didn’t have money for transportation, or something else.

The chatbot we’ve built for them operates in Telegram and in dedicated mobile applications. It reminds users about their medication and scheduled visits, offers help with transportation, and connects them to the company’s professionals.

Through the bot, users also track their mood and physical state - and see their progress in real time, see if there’s something wrong, and immediately act on it. The app and chatbots went into production just a few weeks ago, but everything’s working really well so far. We used PHP, Java, and Flutter to build it: it’s button-based right now, but we’re already preparing to add NLP. (We’re really happy about it.)
