Software Testing in Startups: Basics, Principles, Common Mistakes

“Fixing bugs” is not testing. “Testing” conducted by developers alone is not testing. Testing is not about a “clean product.” So what is it? Let’s talk about the main terms and processes from the somewhat mysterious world of quality assurance and bust some misconceptions about testers and what they do.

Quality assurance, quality control, and testing

If an app you’ve built is clear, understandable, and purposeful, and users are comfortable with and satisfied by its features, the app has high functional quality. This means the software meets the requirements of its end users.

If your app is secure and its code is well-structured and understandable, the app has high non-functional quality. This means it was built with the best engineering practices in mind: if the project is transferred to another development team, its code will be clear and understandable to them.

Quality management regulates software quality. Its goal is to make sure the best possible version of an app reaches end users. To make that happen, quality management professionals work across three nested activities: quality assurance (QA), which includes quality control (QC), which in turn includes testing.

Quality assurance is a set of activities that a QA team performs, in collaboration with product (or project) managers, executives, and stakeholders, to establish quality management standards: what to check, when to check it, and what to do to prevent errors from the very beginning. QA focuses on the processes that establish quality.

Quality control, as the Google Test Blog defines it, “is a concrete world” that sits inside the “meta” world of QA. Quality control consists of activities that check software against the chosen quality requirements. Depending on the type of management your team employs, it is performed at the end of the product development cycle, at the end of a sprint, or every day as code is being written.

So the rules for quality control are established through quality assurance, and the main tools of quality control are tests. That makes testing the lowest level of the three. Now, what do people think of testing, and how is testing actually done in reality?

Main mistakes in testing vs. principles of testing

“Our developers tested that app and everything’s ok.”

There’s a popular yet unreasonable belief that testing should be done in a controlled, sterilized environment. But the truth is that the more closely the testing environment resembles production and emulates real-world settings, the more accurate the testing. Meet testing principle #1: testing is context-dependent.

There are no one-test-fits-all solutions: different test types, at different levels and through different methods, are used depending on the product.

The best analogy here is a product release. You won’t know whether your product is needed unless you talk to your customers (not your friends, relatives, and supporters, who are the equivalent of a controlled, friendly environment). And you can’t, for instance, use the same promotion tactics to sell on B2B and B2C markets.

Similarly, if you’re making an e-commerce app, your test set will differ from the one for, say, a healthcare app, because they have different priorities. Healthcare software is safety-critical and has to follow specific compliance rules; for example, a healthcare application shouldn’t “remember” a user’s login and password. An e-commerce app, on the other hand, is a different story.

Plus, there’s confirmation bias and the “everything is working on my computer” bias. Even if everything seems fine, developers and project managers who are deeply involved in the release process may miss part of the high-level view of the product: the perspective of end users.

Quality assurance specialists advocate for the customer’s perspective on usability. Their test strategy is explicitly oriented toward making sure the entire product is valuable and understandable for its users.

In addition to these familiarity biases, if tests are written and performed by the same people over and over, there’s a risk of running into testing principle #2: the pesticide paradox. It says that when the same test cases are repeated many times, they may eventually stop finding new bugs. That’s why tests need regular updates.

Also, a good testing practice is to test for what is missing in the product, because sometimes a product’s flaw is not in a feature but in the absence of one. Testing teams test for what’s not there with the help of other departments or early users.

“We can’t test everything because we don’t have resources - so we won’t test it at all.”

We’ve heard that kind of black-and-white thinking from small perfectionist teams. There are two main problems with it.

First, exhaustive testing is impossible; that’s testing principle #3.

Second, there’s actually no need to test everything; what you need is sufficient effort. What does that mean? You don’t want to test every input and precondition, and in fact you can’t afford to, either mentally or financially.

What you need to do is see where the risks are and what the priorities are for a user, and then vary testing efforts according to what you’ve learned through rigorous risk assessment and customer research. To act on them (Can the app handle 1000 online users? Does its interface look good outdoors? Where does it collect data, and how easy is it to intercept that data when users are on an open Wi-Fi connection?), you’ll need different types of testing.
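Risk assessment is often reduced to a simple score: how likely a problem is, multiplied by how badly it would hurt users. The Python sketch below assumes that convention (one common approach, not the only one) and splits a hypothetical testing budget in proportion to each area’s score. The areas come from the questions above; the 1-to-5 ratings are made-up placeholders that a real team would set through its own risk assessment and customer research.

```python
from dataclasses import dataclass


@dataclass
class RiskArea:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), estimated by the team
    impact: int      # 1 (cosmetic) to 5 (critical for users or the business)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def allocate_hours(areas: list[RiskArea], total_hours: float) -> dict[str, float]:
    """Split a testing budget across areas in proportion to their risk score."""
    total_score = sum(a.score for a in areas)
    ranked = sorted(areas, key=lambda a: a.score, reverse=True)
    return {a.name: round(total_hours * a.score / total_score, 1) for a in ranked}


# Placeholder ratings for illustration only, not real measurements.
areas = [
    RiskArea("1000 concurrent users", likelihood=3, impact=5),
    RiskArea("UI readable outdoors", likelihood=2, impact=2),
    RiskArea("data exposed on open Wi-Fi", likelihood=2, impact=5),
]

print(allocate_hours(areas, total_hours=40))
# → {'1000 concurrent users': 20.7, 'data exposed on open Wi-Fi': 13.8, 'UI readable outdoors': 5.5}
```

With these placeholder ratings, load handling gets about half of the budget and outdoor readability the least. The point is the mechanism: the ranking changes as soon as the team’s estimates do.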

There’s another side to this “wanting to test everything” thinking. A product does get tested, the team finds a lot of bugs, and it concludes from their sheer number that testing is going well. However, a lot of fixed bugs doesn’t always mean a good product. Because, guess what, testing principle #4: testing shows the presence of defects. It cannot prove that there are none; “no bugs found” is not proof that a product is perfect. And the more you test, the more bugs you find.

To break the cycle, quality assurance teams gather data about defects, the software areas where they can occur, and the test categories (or types) they fit into. All that information is collected into a test plan. If a test plan is written correctly, with both customers and risks in mind, testing teams stop when the plan has been executed. Then they test the product in an environment close to production, to ensure it is usable and good for end users. And they keep testing after release so as not to fall into testing principle #5, the absence-of-errors fallacy: software that is free of known bugs is still a failure if it doesn’t meet users’ needs.

High-quality test plans also help use resources more efficiently and define clearer performance indicators for testers’ responsibilities. Plus, testers can update these plans when they find something missing and act on it quickly.

“We’ll test after the product is ready; right now there’s nothing to test.”

Testing is a process that should take place at every stage of the product life cycle, and, contrary to popular myth, the absence of a product is not a reason not to test.

Testing isn’t only about checking whether the code is right and the interface looks OK. Everything your team produces can (and, in a perfect world, should) be evaluated and verified against certain success criteria.

So, apart from the code, quality assurance management develops and executes strategies for testing the product concept and architecture, the service techniques that connect you to your end users, error detection methods, user journey flows, etc.

Most of these things don’t exist in code, and by focusing solely on code you lose the chance to maintain quality control in your company from the start.

The consequences are not good: when you test right before the product release and check only code quality, you risk missing flaws that appeared before the development stage.

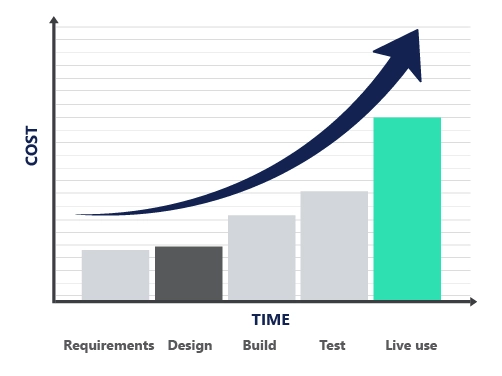

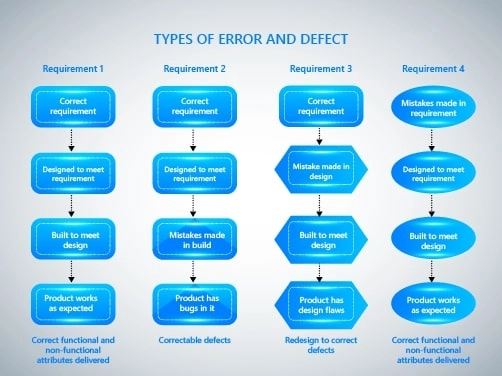

The images above show what can happen if you do so. That’s why testing principle #6 is early testing.

The earlier you introduce quality assurance into your management model, the better: professionals will help you cover areas you’ve overlooked, and you’ll reduce the risk of something unexpected and unpleasant happening at the late stages of the production cycle.

By the way, in testing, “something unexpected and unpleasant” almost always turns up right before release.

This is the phenomenon of defect clustering, testing principle #7. Simply put, bugs come in clusters (like misfortunes), and these clusters are usually discovered in a small number of modules right when everything seems ready for release. The underlying rule of defect clustering is the Pareto principle, or 80/20 rule: roughly 80% of results come from 20% of efforts. By analogy, about 80% of bugs hide in 20% of the code.
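Teams usually check for defect clustering with data from their bug tracker. Here’s a minimal Python sketch, assuming you can export one module name per reported bug: it finds the smallest group of modules that together account for 80% of the defects. The module names and counts are invented for illustration.

```python
from collections import Counter


def pareto_modules(defect_modules: list[str], share: float = 0.8) -> list[str]:
    """Return the smallest set of modules that together hold `share` of all defects."""
    counts = Counter(defect_modules)
    total = sum(counts.values())
    selected, covered = [], 0
    # Take modules from the most to the least defect-prone until the share is reached.
    for module, n in counts.most_common():
        if covered >= share * total:
            break
        selected.append(module)
        covered += n
    return selected


# Hypothetical export: one entry per bug report, naming the affected module.
bug_reports = (
    ["checkout"] * 10
    + ["payments"] * 6
    + ["search"] * 2
    + ["profile"] * 1
    + ["settings"] * 1
)

print(pareto_modules(bug_reports))  # → ['checkout', 'payments']
```

If the returned list is short compared with the total number of modules, that’s where extra testing effort is most likely to pay off.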

Why is it important? When a product is complex and its code is sophisticated, QA specialists identify the most problematic areas of code when planning tests and focus their efforts there, saving you, once again, time and money.

Basics of testing processes, testing types, methods, and levels

As we’ve already said, businesses tend to oversimplify testing. We’ve addressed the common mistakes; now let’s talk about what a QA department’s work looks like.

Let’s say you want to check whether the smartphone you’re going to buy works properly. What you’ll do is very similar to a testing process, except that there’s no testing documentation, which in real projects may be used by other people later and so has to be written carefully and clearly. So, what does the quality assurance process include?

Software testing: test planning and control

You can’t test all the features on that phone at once, but you don’t need to. While “testing”, you try to spend minimum resources and gain maximum value: knowing for sure that the phone is usable and comfortable for you.

A software testing strategy defines the goals of the testing process and how, and at what cost, a team can achieve them.

In the smartphone metaphor, a test plan would be a detailed roadmap of the checklists and standards your phone should comply with, the scope of the main features that matter to you, plus things that are nice to use or look at. Generally, it’s a list of things that help you know whether you’re making the right choice, along with methods to confirm it.

Requirements for a software test plan are defined in the IEEE standard for software test documentation (IEEE 829), but following it is not obligatory. Many companies that use fast, iterative management methodologies to develop their products have their own approaches to writing a test plan.

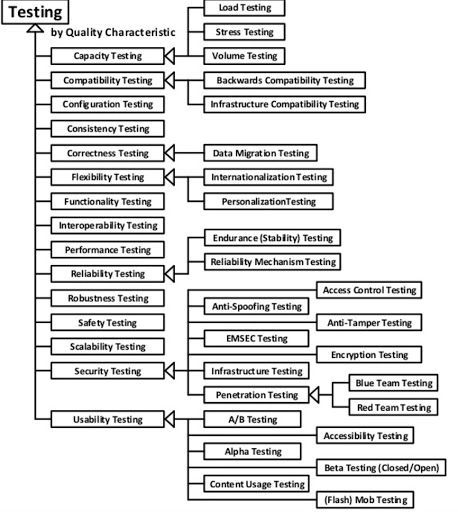

Testing types. The test plan defines which testing types will be used, and there’s a whole bunch of them. For instance, you’ll use functional testing to see whether your smartphone recognizes your voice command; use case testing to check whether you can take a photo and then import it to Instagram; and performance and endurance testing to see how many windows the smartphone can keep open without slowing down. Some of them are shown in the image above.

All of these test types are performed using different methods and at different levels of testing; these details are also specified in the test plan.

Testing levels. Levels of software testing describe the different parts of a software system at which testing should be performed. There are four main test levels.

- Unit (or component) testing tests the smallest pieces of software, for instance modules and classes, separately. Unlike testing at the other levels, it is usually performed by developers.

- Integration testing tests the connections between units and checks whether these units are ready to be assembled into a system.

- System testing checks the system as a whole and verifies its compliance with the requirements specified in the test plan.

- Acceptance testing validates the product against the requirements defined in the product specification and checks whether it accurately meets user needs.
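Here’s how the two lowest levels can look in practice, sketched with Python’s built-in `unittest` module. The cart code is hypothetical. The unit tests check `line_total()` in isolation, while the integration test checks that `Cart` and `line_total()` work correctly together. System and acceptance testing exercise the whole product, usually in a deployed environment, so they don’t fit into a short snippet.

```python
import unittest


def line_total(unit_price_cents: int, quantity: int) -> int:
    """Hypothetical unit: the price of one cart line."""
    if quantity < 0:
        raise ValueError("quantity cannot be negative")
    return unit_price_cents * quantity


class Cart:
    """Hypothetical component that assembles units: stores lines, uses line_total()."""

    def __init__(self) -> None:
        self.lines: list[tuple[int, int]] = []

    def add(self, unit_price_cents: int, quantity: int) -> None:
        self.lines.append((unit_price_cents, quantity))

    def total(self) -> int:
        return sum(line_total(price, qty) for price, qty in self.lines)


class UnitTests(unittest.TestCase):
    # Unit level: one function, checked in isolation.
    def test_line_total(self):
        self.assertEqual(line_total(250, 4), 1000)

    def test_negative_quantity_rejected(self):
        with self.assertRaises(ValueError):
            line_total(250, -1)


class IntegrationTests(unittest.TestCase):
    # Integration level: Cart and line_total() working together.
    def test_cart_total_combines_lines(self):
        cart = Cart()
        cart.add(250, 4)
        cart.add(100, 3)
        self.assertEqual(cart.total(), 1300)


if __name__ == "__main__":
    unittest.main()
```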

Testing methods. Methods describe exactly how quality control specialists test a product: with knowledge of its internal structure (white-box testing), without knowledge of its internal structure (black-box testing), or combining the two approaches (grey-box testing). Instructions for these tests are outlined in the testing documentation. Another testing method, ad hoc testing, doesn’t require any planning or specs and has no clear exit criteria: the tester simply “surfs” the product, paying attention to things that are out of order. We call it monkey testing.
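The difference between black-box and white-box testing lies in where the test cases come from. In this minimal Python sketch, `shipping_fee()` and its free-shipping rule are hypothetical. The black-box checks are derived only from the stated rule (boundary values around the threshold), while the white-box checks come from reading the code, so they also reach a validation branch the rule never mentions.

```python
FREE_SHIPPING_FROM = 5000  # hypothetical rule: orders of 50.00 or more ship free


def shipping_fee(order_total_cents: int) -> int:
    """Hypothetical function under test: shipping fee in cents."""
    if order_total_cents < 0:
        raise ValueError("order total cannot be negative")
    if order_total_cents >= FREE_SHIPPING_FROM:
        return 0
    return 499


def black_box_checks() -> None:
    # Derived only from the spec ("free from 50.00, otherwise 4.99"),
    # using boundary values around the threshold, without reading the code.
    assert shipping_fee(4999) == 499
    assert shipping_fee(5000) == 0
    assert shipping_fee(5001) == 0


def white_box_checks() -> None:
    # Derived from reading the code: every branch is executed,
    # including input validation that the spec never mentioned.
    try:
        shipping_fee(-1)
    except ValueError:
        pass
    else:
        raise AssertionError("negative totals should be rejected")
    assert shipping_fee(0) == 499
    assert shipping_fee(10_000) == 0


if __name__ == "__main__":
    black_box_checks()
    white_box_checks()
    print("all checks passed")
```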

Software testing: stages from analysis to execution

After the test plan is ready, the next stages await.

Analysis & design. At this step, the approaches and techniques needed to fully meet the testing objectives defined in the strategy are translated into test designs and procedures. Here, QA engineers familiarize themselves with the requirements and specifications and start creating tests before the code is written (black-box tests, for instance), using developers’ documentation.

Then, it’s necessary to choose test conditions, depending on what’s going to be tested, what the specifications of the tested element are, what its structure is, and how it must behave. Then, based on this information and the data needed to begin the testing process, the team designs test cases. A test case is a scenario, a set of conditions applied to a unit or a system to see whether it satisfies its requirements: in other words, whether it works as it’s supposed to.
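A test case can be written down as a small record: an ID, the condition it covers, the input, and the expected result. The Python sketch below assumes a hypothetical password rule (at least 8 characters, including a digit) as the requirement and runs each case against it, returning the IDs of the cases that failed. In practice, teams usually keep such records in a test management tool or express them as parametrized tests in their test framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class CaseSpec:
    case_id: str
    condition: str   # which aspect of the requirement this case covers
    test_input: str
    expected: bool


def is_valid_password(pw: str) -> bool:
    """Hypothetical requirement: at least 8 characters, including a digit."""
    return len(pw) >= 8 and any(ch.isdigit() for ch in pw)


CASES = [
    CaseSpec("TC-01", "meets all rules", "secret123", True),
    CaseSpec("TC-02", "too short", "abc1", False),
    CaseSpec("TC-03", "no digit", "password", False),
    CaseSpec("TC-04", "exactly 8 characters with a digit", "abcdefg1", True),
]


def run(cases: list[CaseSpec], func: Callable[[str], bool]) -> list[str]:
    """Run each case and return the IDs of the ones that failed."""
    return [c.case_id for c in cases if func(c.test_input) != c.expected]


print(run(CASES, is_valid_password))  # → []
```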

After that, the QA team builds a testing environment, which, as we’ve already said, should resemble real-world production settings as closely as possible. Note that it should reproduce both the hardware and the software used by end users.

Now, along with dynamic testing, whose types, levels, and methods we’ve briefly described above, there’s static testing. Dynamic testing covers the QA work that begins once some parts of the product exist, testing them “in motion” by running them. Static testing covers pre-release source code reviews and team reviews, done without executing the code; it’s also called verification testing. It’s the least expensive and least in-depth type of testing.
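Static testing examines code without running it. Besides human code reviews, teams often use automated static analysis tools (in Python, linters such as pylint or flake8). As a minimal illustration, the sketch below uses Python’s standard `ast` module to flag bare `except:` clauses, which silently swallow every error, in a snippet that is parsed but never executed. The rule and the sample code are assumptions chosen for brevity.

```python
import ast

# Sample code to review. It is only parsed, never run.
SOURCE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def find_bare_excepts(source: str) -> list[int]:
    """Return line numbers of bare `except:` clauses, without executing the code."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


print(find_bare_excepts(SOURCE))  # → [5]
```

The `load()` function is never called; the check works purely on the code’s structure, which is what makes static testing cheap to run early.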

Implementation and execution. During this stage, QA specialists implement and prioritize test cases and record the testing results in the documentation, along with fixes and requirements for new test cases, updates, etc. Testing can be manual or automated, and each fits different scenarios. For instance, automated tests are perfect for performance and load testing, but for checks that are needed only once or twice, or that require human intelligence and the capacity to understand others (and by others we, as usual, mean customers), manual testing is required.
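Load testing shows why automation fits performance checks: no human can simulate a thousand users by hand. The Python sketch below is a deliberately simplified, in-process illustration. `handle_request()` is a hypothetical stand-in for a real endpoint, and a thread pool fires 1000 calls at it while recording latencies. For real services, dedicated tools such as Apache JMeter or Locust send traffic over the network instead.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> str:
    """Hypothetical stand-in for the endpoint under test."""
    time.sleep(0.001)  # simulate a little I/O work
    return f"ok:{user_id}"


def timed_call(user_id: int) -> float:
    """Call the endpoint once, check the response, and return the latency in seconds."""
    start = time.perf_counter()
    assert handle_request(user_id) == f"ok:{user_id}"
    return time.perf_counter() - start


def load_test(users: int = 1000, workers: int = 50) -> dict[str, float]:
    """Fire `users` requests through a thread pool and summarize latencies in ms."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        latencies = sorted(pool.map(timed_call, range(users)))
    return {
        "p50_ms": statistics.median(latencies) * 1000,
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))] * 1000,
        "max_ms": latencies[-1] * 1000,
    }


if __name__ == "__main__":
    print(load_test())
```

The timings depend on the machine, so a real test would compare them against thresholds agreed on in the test plan rather than against fixed numbers.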

Evaluating exit criteria and reporting. In this step, testers check the results of their activities, see whether they match the expected results, and prepare summary reports for executives and stakeholders. Exit criteria are usually predefined in the test plan; they show QA engineers where the line of “enough testing” lies.
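Exit criteria are easiest to apply when they’re written as explicit checks. The following Python sketch assumes three example criteria (every planned case executed, a pass rate of at least 95%, and no open critical defects); the thresholds are hypothetical, and each team sets its own in the test plan.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    planned: int               # test cases in the plan
    executed: int
    passed: int
    open_critical_defects: int


def exit_criteria_met(s: RunSummary, min_pass_rate: float = 0.95) -> tuple[bool, list[str]]:
    """Check a test run against example exit criteria; return (met, unmet reasons)."""
    reasons = []
    if s.executed < s.planned:
        reasons.append(f"{s.planned - s.executed} planned cases not executed")
    pass_rate = s.passed / s.executed if s.executed else 0.0
    if pass_rate < min_pass_rate:
        reasons.append(f"pass rate {pass_rate:.0%} below {min_pass_rate:.0%}")
    if s.open_critical_defects > 0:
        reasons.append(f"{s.open_critical_defects} critical defects still open")
    return (not reasons, reasons)


print(exit_criteria_met(RunSummary(planned=120, executed=120, passed=117, open_critical_defects=0)))
# → (True, [])
print(exit_criteria_met(RunSummary(planned=120, executed=110, passed=100, open_critical_defects=2)))
# → (False, ['10 planned cases not executed', 'pass rate 91% below 95%', '2 critical defects still open'])
```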

The importance of quality assurance is often overlooked due to a lack of resources or misconceptions about what QA is. We hope this article sheds some light on it. Businesses lose billions of dollars due to mistakes in their software. Quality always requires resources, both time and talent, but it’s worth it: without testing, there will be no profitable product.

If you want to hire a dedicated software development team to build or test your software, contact us.
